From Box to Building—How Open Compute Is Rewiring the AI Factory

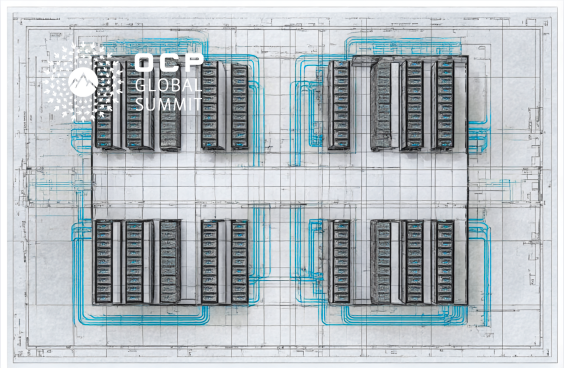

The unit of design for data centers and AI factories has pivoted from system and rack to rack and data hall. It’s how this industry will reconcile physics (power and heat), timelines (time-to-online), and diversity (multi-vendor systems) in the AI era.

Perhaps nowhere is this shift more evident than on the show floor of the Open Compute Project Foundation’s (OCP’s) 2025 Global Summit in San Jose this week. OCP’s latest news, along with a litany of exhibitor and partner announcements, lands squarely in this rack-first world. The OCP community is leaning into an “Open Data Center for AI” framework, codifying common physical and operational baselines so racks, pods, and clusters can be assembled and operated with far less bespoke engineering.

In this OCP 2025 industry spotlight, I’m going to talk about the innovations that stood out to me and share my take on the next steps I believe this industry needs to take in lockstep.

Silicon Steps Up: Governance and Chiplets

With AMD, Arm, and NVIDIA now on the OCP Board—represented by Robert Hormuth (AMD), Mohamed Awad (Arm), and Rob Ober (NVIDIA)—we know that the future of hyperscale is heterogeneous. For Arm, that governance role pairs with its Foundation Chiplet System Architecture (FCSA) effort aimed at a vendor-neutral spec for interoperable chiplets. In a heterogeneous era, a common chiplet language becomes the on-ramp to modular silicon roadmaps—and ultimately to more fungible racks and faster deployment.

Intel’s Rack-Scale Vision Shows Up on the Floor

A decade ago, Intel’s Rack Scale Design (RSD) pitched a composable, disaggregated rack where compute, storage, and accelerators are pooled and composed by software using open, Redfish-based APIs. The goal: upgrade resources independently, raise utilization, and manage at the pod level, not the node. That picture read like science fiction in 2015; in 2025, it looks a lot like the OCP show floor. Open firmware: check. Rack and multi-rack design points: check. Integration with power (are you kidding me?): check. Read on!

Helios: AMD’s Rack Is the Product

AMD used OCP to stop talking parts and start selling a rack: Helios. Built on Meta’s Open Rack Wide (ORW) spec, Helios aligns EPYC head-nodes, Instinct accelerators, ROCm, and networking into a serviceable, buyable rack system—72 MI450s per rack with an HBM footprint that outmuscles Vera Rubin-class designs on total memory. Oracle leaning in (50K MI450s slated for Helios-based clusters) is the market tell: rack-as-a-SKU is no longer hypothetical.

This is the cleanest proof yet that the hyperscale playbook—standardized mechanics, consistent service windows, and software-first ops—has crossed into open, multi-vendor racks. Let’s go team Instinct!
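To put the Helios numbers in rack terms, here is a back-of-the-envelope sketch. It assumes the "50K" figure is exactly 50,000 accelerators and that every one of them lands in a fully populated 72-accelerator rack; neither assumption is confirmed by AMD or Oracle, and the function name is purely illustrative.

```python
import math

# Figures quoted in the article; treating "50K" as exactly 50,000 is an assumption.
ACCELERATORS_PER_RACK = 72
PLANNED_ACCELERATORS = 50_000


def racks_needed(accelerators: int, per_rack: int) -> int:
    """Return the number of fully populated racks needed to hold `accelerators`."""
    return math.ceil(accelerators / per_rack)


racks = racks_needed(PLANNED_ACCELERATORS, ACCELERATORS_PER_RACK)
print(racks)  # 695
```

Under those assumptions the commitment works out to roughly 700 racks, which is why rack-level serviceability and commissioning matter as much as the silicon.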

Flex: Pre-Engineered Building Blocks for the AI Factory

Flex’s OCP-timed platform is an emblem of the shift. The company is productizing what operators actually fight with at scale: prefabricated power pods and skids, 1 MW rack designs, rack-level coolant distribution, and capacitive energy buffering to tame synchronized AI load spikes. Flex positions it as a faster path from design to energized racks—an argument that resonates with any operator trying to compress site schedules without inviting risk. The bigger point: when the rack becomes the product, integration, commissioning, and serviceability must be first-class features.

GIGAPOD: Turnkey NVIDIA Racks at Scale

Giga Computing (GIGABYTE) is shipping GIGAPOD clusters: multi-rack, turnkey NVIDIA HGX (Hopper/Blackwell) systems offered in air-cooled and liquid-cooled topologies. The point isn’t one server; it’s a pre-integrated pod where nine air-cooled racks (or five liquid-cooled racks) behave like a single AI system with known thermals, service flows, and performance envelopes, exactly the “rack as product” pattern operators keep asking for.

MiTAC: You Want to Rack It? We Can Stack It.

Just when you think we are done, enter MiTAC. This confluence of the Tyan and Intel systems businesses has the heritage and technical chops to deliver what operators want, and its OCP 21” rack, set beside a standard 19” rack, spoke volumes about delivering infrastructure to customer requirements. What’s more, MiTAC offers CPU and GPU configurations to suit every palate, paired with a leading open firmware solution to make integration easy.

Innovation inside the rack complemented these rack-scale designs. Here are a few of the highlights.

Celestica’s 1.6-TbE Class Switches Underline “Rack as Product”

Celestica’s new switch family advances bandwidth and thermal design for AI/ML clusters with open-rack friendly mechanics and cooling options. It’s the kind of network gear built to live as a rack component—aligned with power, airflow/liquid paths, and service models—rather than a freestanding device. When operators talk about standardizing deployment at the rack/pod level, this is what they mean.

ASRock Rack + ZutaCore: Waterless Two-Phase DTC for AI Factory Racks

ASRock Rack is showcasing an HGX B300 platform that integrates ZutaCore’s waterless, two-phase direct-to-chip cooling (dielectric fluid, direct heat removal, serviceable design). The aim is simple: enable higher rack densities without committing the building to a single facility-water strategy. For brownfield deployments or sites with water constraints, that optionality matters. ASRock Rack also has an air-cooled B300 variant to meet operators where their rooms are today.

Accelsius: Multi-Rack Two-Phase, Packaged for Speed

Accelsius’ latest CDU solution targets multi-rack direct-to-chip deployments, turning two-phase cooling into something operators can drop in alongside existing plant gear. The value prop is commissioning predictability and density headroom—two things in short supply on accelerated build schedules.

Cold-Plate & Process Innovations

We’re also seeing upstream manufacturing advances that change the thermal math—new plate geometries and additive processes that increase heat flux at lower flow rates. For facilities teams, that can translate into smaller pumps, simpler loops, and more room for the rest of the rack kit.

Why This Matters Now

These announcements matter because they focus on what actually moves projects: deployability, serviceability, and schedule certainty. They turn “open” from a philosophy into a repeatable path: standardized racks, power and liquid interfaces, network gear designed as rack components, and thermal advances that reduce the number of custom steps between CAD and capacity.

Deployment velocity, not theory. Flex’s pre-engineered power/cooling pods, 1 MW racks, and rack-level CDUs turn “integration” into a repeatable process—shortening the path from CAD to energized capacity.

Density without a rebuild. ASRock Rack + ZutaCore (waterless two-phase) and Accelsius (multi-rack two-phase CDU) give operators liquid options that fit existing plants and brownfield realities—freeing space/flow for what matters inside the rack.

Rack-native networking. Celestica’s 1.6 TbE/102.4T-class switches are engineered as components of the rack, aligning thermals, power domains, and service windows so pods stay installable and maintainable.

Platforms > parts. AMD’s Helios shows vendors shipping serviceable rack systems, not just nodes—reducing onsite glue work and clarifying ownership across CPU, accelerator, interconnect, and service.

Upstream thermal gains. New cold-plate geometries and additive processes raise heat-flux at lower flow rates—translating to smaller pumps, simpler loops, and more payload room per rack.

Circular racks, not just circular parts. Not every AI program needs bleeding-edge silicon on Day 1. Rack Renew (a Sims Lifecycle Services subsidiary) is packaging recommissioned OCP gear (think Tioga Pass sleds back into ORV2 racks with busbar power) for rapid, budget-sane deployments. Its process adds firmware baselines, multi-day burn-in, and BOM documentation; customers see 40–60% capex relief and reuse racks with up to ~15% power-delivery efficiency gains from the OCP busbar versus traditional PDUs. It’s a pragmatic on-ramp that complements new AI halls with affordable general compute, storage tiers, and edge fleets.

TechArena Take

Open only matters if it reduces steps and risk. What stood out on Days 3 and 4 was how many vendors are productizing the gaps that slow AI halls: Flex’s platformization of power/cooling, Accelsius’ multi-rack two-phase, ASRock Rack + ZutaCore’s waterless DLC, AMD’s rack-scale Helios, and upstream cold-plate advances that ease the facility side.

The next mile: the industry needs integration, not aspiration. Publish reference rack BOMs, commissioning checklists (power, liquid, network), and baseline telemetry/firmware schemas that travel across vendors. If we align on rack mechanics, service clearances, power rails, coolant interfaces, and observability, multi-vendor pods become selectable products—not projects.
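To make that ask concrete, here is a minimal sketch of what a vendor-neutral rack record could look like: a BOM, a firmware baseline, and a commissioning checklist covering the three domains named above. Everything in it is hypothetical. The class names, fields, and sample values are illustrative assumptions, not an OCP schema or any vendor's format.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Checklist domains taken from the text; the schema itself is an assumption.
REQUIRED_DOMAINS = ("power", "liquid", "network")


@dataclass
class ChecklistItem:
    domain: str          # expected to be one of REQUIRED_DOMAINS
    description: str
    passed: bool = False


@dataclass
class RackManifest:
    rack_id: str
    vendor: str
    bom: Dict[str, int] = field(default_factory=dict)       # part name -> quantity
    firmware: Dict[str, str] = field(default_factory=dict)  # component -> version
    checklist: List[ChecklistItem] = field(default_factory=list)

    def blocking_issues(self) -> List[str]:
        """List every failed check, plus any domain with no checks recorded."""
        issues: List[str] = []
        for domain in REQUIRED_DOMAINS:
            items = [i for i in self.checklist if i.domain == domain]
            if not items:
                issues.append(f"{domain}: no checks recorded")
            issues.extend(
                f"{domain}: {i.description}" for i in items if not i.passed
            )
        return issues

    def ready_to_energize(self) -> bool:
        return not self.blocking_issues()


# Hypothetical sample data for illustration only.
rack = RackManifest(
    rack_id="hall1-row3-rack07",
    vendor="example-vendor",
    bom={"accelerator-tray": 18, "switch-tray": 2},
    firmware={"bmc": "1.0.0"},
    checklist=[
        ChecklistItem("power", "busbar torque verified", passed=True),
        ChecklistItem("liquid", "loop pressure test", passed=False),
    ],
)
print(rack.blocking_issues())
# ['liquid: loop pressure test', 'network: no checks recorded']
```

The design choice worth noting is that a missing domain blocks readiness just like a failed check does. A shared record only helps across vendors if "not tested" can never be mistaken for "passed."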

With all this innovation, one question tops my list: how do the companies embracing open compute integrate their designs—cleanly, repeatedly, and at speed? That’s my biggest takeaway from OCP 2025, and it’s what I’ll be pressing in upcoming TechArena podcasts.
