OCP EMEA Summit Highlights: The Race to 1MW IT Loads per Rack

At the recent Open Compute Project Foundation (OCP) EMEA Summit in Dublin, one of the major announcements was Google’s unveiling of a 1 megawatt (MW) IT rack. As AI continues to disrupt the IT landscape, it is also pushing the boundaries of physical infrastructure in the data center. And it’s not just power: it’s also the cooling, space and mechanical engineering required to support it all. In this article, I’ll outline some of the observations and insights I took from the regional OCP summit with regard to rack and power.

Status Quo

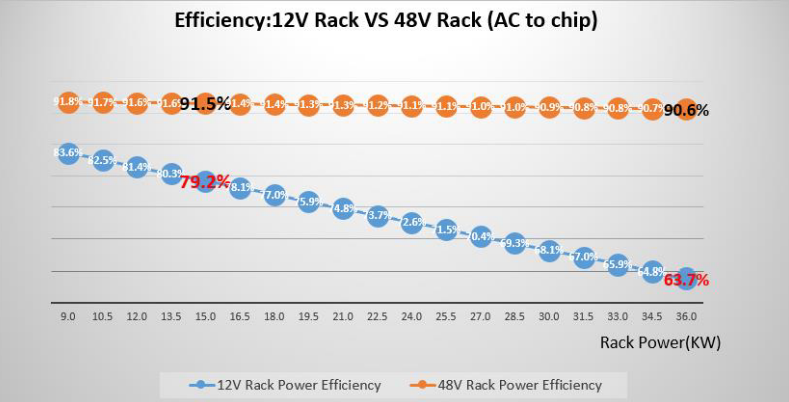

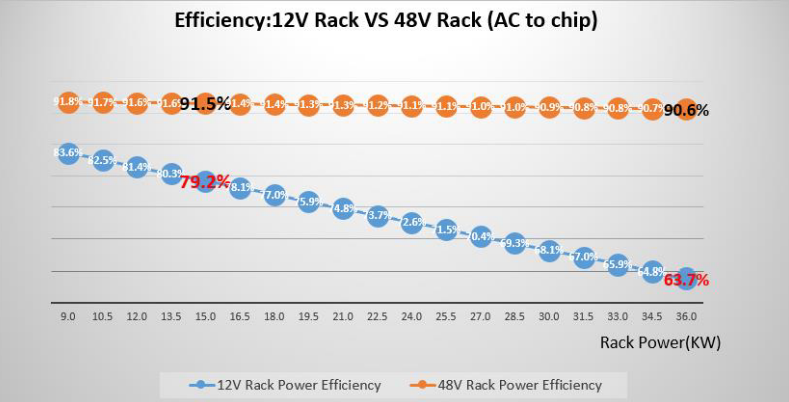

As a quick high-level baseline, let’s start with the status quo. The OCP rack specification is often referred to as “Open Rack,” followed by a version identifier. The mainstream version you’ll currently find in most OCP-based data centers is Open Rack Version 2 (ORV2), which is powered by a 12-volt busbar. Unlike traditional 19" racks, where every piece of equipment has its own power supplies and power connections, OCP racks feed their equipment from a centralized busbar. This makes power delivery more efficient, saving 15% to 30% of power per rack! However, at 12 volts, the ORV2 busbar struggles to support today’s more demanding IT workloads.

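As a result, the industry is well into the transition from ORV2 to ORV3, whose 48-volt power design builds on the 48-volt rack work Google brought to OCP in 2016. Version 3 raised the busbar voltage from 12 to 48 volts. Because the same power can then be delivered at a quarter of the current (P = V × I), the higher voltage allows more power to reach the rack equipment, supporting compute-intensive workloads and early AI accelerators.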
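To make the voltage argument concrete, here is a minimal Python sketch comparing ORV2’s 12 volts with ORV3’s 48 volts for the same load. It assumes a purely resistive busbar path with a hypothetical resistance (`PATH_RESISTANCE_OHM`) and an illustrative 18 kW load; neither value comes from an OCP specification. Since current is I = P / V and conduction loss is I²R, quadrupling the voltage cuts the current to a quarter and the resistive loss to one-sixteenth.

```python
# Sketch: why raising busbar voltage helps. Assumes a fixed, purely resistive
# conductor path; real busbars also have connector and contact losses.

RACK_POWER_W = 18_000        # illustrative load (low end of the ORV3 range)
PATH_RESISTANCE_OHM = 50e-6  # hypothetical end-to-end busbar resistance


def busbar_current(power_w: float, voltage_v: float) -> float:
    """Current the busbar must carry to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def conduction_loss(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in the busbar path for the given load."""
    current = busbar_current(power_w, voltage_v)
    return current ** 2 * resistance_ohm


for volts in (12, 48):
    amps = busbar_current(RACK_POWER_W, volts)
    loss = conduction_loss(RACK_POWER_W, volts, PATH_RESISTANCE_OHM)
    print(f"{volts:>2} V: {amps:7.1f} A, {loss:7.1f} W lost in the busbar")

# Ratio of losses at 12 V versus 48 V for the same load and conductor.
ratio = conduction_loss(RACK_POWER_W, 12, PATH_RESISTANCE_OHM) / conduction_loss(
    RACK_POWER_W, 48, PATH_RESISTANCE_OHM
)
print(f"Loss ratio 12 V vs 48 V: {ratio:.0f}x")
```

The 16x ratio holds for any resistance value because it depends only on (48 / 12)²; the absolute wattages, by contrast, depend entirely on the assumed resistance.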

Beyond raising rack power to 18 to 36 kilowatts (kW), ORV3 also introduced new connectors, enabled liquid cooling via optional rear-mounted manifolds, and offers compatibility with 19" equipment through adapters.

However, as machine learning and AI development accelerated, and as growing core counts in xPUs (GPUs, CPUs and tensor processing units) drove rack power density to new heights, a new power and cooling architecture was required. The hyperscalers and the OCP community responded with a new iteration, the ORV3 HPR (High Power Rack) configuration, which pushes power capacity beyond 92 kW per rack, with projected loads of up to 140 kW. One of the main differences from standard ORV3 is the busbar: in the HPR configuration it is extended and deepened to carry this capacity while remaining compatible with standard ORV3 equipment.

This was largely the status quo until the 2025 regional summit, when Google, together with Meta, Microsoft and the OCP, announced that they are working on a 0.5 specification for Mount Diablo, which will outline the next jump in rack and power architecture. The specification will detail what power delivery looks like when it jumps from 48 volts to 400 volts, supporting a staggering 1 MW of IT load per rack.
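The jump to 400 volts becomes clearer when you look at the current a busbar would have to carry. The sketch below treats the cited voltages as simple DC bus voltages and ignores conversion losses; it is an illustration using the rack loads quoted in this article, not a reading of the Mount Diablo draft.

```python
# Rough comparison of busbar current at 48 V versus 400 V for rack loads
# mentioned in this article. Ignores conversion losses and distribution details.

RACK_LOADS_KW = {
    "ORV3 (upper range)": 36,
    "ORV3 HPR (projected)": 140,
    "Mount Diablo target": 1_000,
}


def current_amps(load_kw: float, volts: float) -> float:
    """DC current needed to deliver load_kw at the given bus voltage (I = P / V)."""
    return load_kw * 1_000 / volts


for name, kw in RACK_LOADS_KW.items():
    i48 = current_amps(kw, 48)
    i400 = current_amps(kw, 400)
    print(f"{name:<22} {kw:>5} kW: {i48:>9,.0f} A at 48 V | {i400:>7,.0f} A at 400 V")
```

At 1 MW, a 48-volt bus would need on the order of 20,000 A, versus 2,500 A at 400 volts, which is why simply scaling up the existing busbar stops being practical.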

ORV3 HPR Next

It is the evolution of AI that drives the need to support these kinds of capacities. Typical enterprise and cloud workloads still fit comfortably within the ORV3 power envelope, but hyperscalers have realized that supporting AI infrastructure in a single rack is quickly becoming unrealistic due to the power density. Ultimately, the solution lies in separating power and compute into distinct racks, which we’ll refer to as a “disaggregated power rack” going forward. At the OCP Summit, Meta took the stage for the first time to discuss ORV3 HPR Next and laid out a roadmap with three project scopes. The following information is directly from their slides at the OCP Summit:

Each of these projects will increase the power capabilities of the racks to support next-generation AI workloads, but each will also bring its own architectural changes. ORV3 HPR V1 and V2 will still be single-rack designs with upgraded power supply units. However, to support the increased power delivery, a new water-cooled busbar is required to keep the temperature rise within the specified maximum of 30°C. The following information is directly from their slides at the OCP Summit:

The first implementation of the liquid-cooled busbar was on display at the OCP showcase, courtesy of TE Connectivity. Today’s busbar tops out at about 120 to 140 kW; applying liquid cooling extends its operating range to 750 kW! Beyond that, design changes such as a deeper busbar with even more cooling plates will enable further scalability.
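Because busbar heat scales with the square of current, the step from 140 kW to 750 kW is bigger than it first looks. This sketch estimates I²R self-heating at 48 V and checks it against the 30°C temperature-rise limit cited for the HPR roadmap. The busbar resistance and the lumped thermal resistances for air and liquid cooling are hypothetical values picked for illustration, and real busbars heat non-uniformly, so treat the output as qualitative.

```python
# Sketch: busbar self-heating versus load at a fixed 48 V bus.
# BUSBAR_RESISTANCE_OHM and the thermal resistances are hypothetical values,
# not figures from Meta or TE Connectivity.

BUS_VOLTAGE_V = 48
BUSBAR_RESISTANCE_OHM = 20e-6  # hypothetical
MAX_T_RISE_K = 30.0            # maximum temperature rise cited in the article

THERMAL_RESISTANCE_K_PER_W = {
    "air-cooled": 0.15,      # hypothetical lumped value
    "liquid-cooled": 0.005,  # hypothetical lumped value
}


def busbar_heat_w(load_w: float) -> float:
    """I^2 * R heat dissipated in the busbar for a given rack load."""
    current = load_w / BUS_VOLTAGE_V
    return current ** 2 * BUSBAR_RESISTANCE_OHM


def temperature_rise_k(load_w: float, r_th: float) -> float:
    """Steady-state temperature rise = dissipated heat * thermal resistance."""
    return busbar_heat_w(load_w) * r_th


# Heat grows with the square of load: (750 / 140)^2 is roughly 28.7x.
heat_ratio = busbar_heat_w(750e3) / busbar_heat_w(140e3)
print(f"Busbar heat at 750 kW vs 140 kW: {heat_ratio:.1f}x")

for load_kw in (140, 750):
    for cooling, r_th in THERMAL_RESISTANCE_K_PER_W.items():
        rise = temperature_rise_k(load_kw * 1e3, r_th)
        verdict = "within limit" if rise <= MAX_T_RISE_K else "exceeds limit"
        print(f"{load_kw:>4} kW, {cooling:<13}: +{rise:6.1f} K ({verdict})")
```

With these made-up inputs, the air-cooled busbar stays under 30 K at 140 kW but is far beyond the limit at 750 kW, while the liquid-cooled case stays within it at both loads; the point is the square-law scaling, not the specific numbers.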

Power and compute are first split into separate racks once capacity scales beyond 200 kW.

Initially, in V3, power will be delivered via a vertical busbar, which V4 will replace with power cables to minimize losses. Clearly, this work is not a current priority for most tier 2 data centers, as these volumes and workloads are primarily the domain of the hyperscalers. But it is a bellwether of things to come.
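As a rough summary of the roadmap so far, the hypothetical helper below maps a rack IT load to the architecture this article associates with it, using the figures quoted earlier (ORV3 up to 36 kW, ORV3 HPR projected to 140 kW, disaggregation beyond 200 kW). The function name and the exact cut-offs are my own simplification, not part of any OCP specification; real designs depend on far more than load alone.

```python
# Hypothetical helper: maps a rack IT load to the architecture tier this
# article associates with it. Cut-offs are simplified from the figures quoted
# in the article; the 140-200 kW band stands for the single-rack,
# liquid-cooled busbar stage (HPR Next V1/V2) as this article describes it.


def rack_architecture(load_kw: float) -> str:
    """Return the (simplified) rack/power architecture for a given IT load."""
    if load_kw <= 0:
        raise ValueError("load_kw must be positive")
    if load_kw <= 36:
        return "ORV3: 48 V busbar"
    if load_kw <= 140:
        return "ORV3 HPR: deeper 48 V busbar"
    if load_kw <= 200:
        return "ORV3 HPR Next V1/V2: single rack, liquid-cooled busbar"
    return "Disaggregated power rack: separate power and compute racks"


for load in (30, 120, 180, 1_000):
    print(f"{load:>5} kW -> {rack_architecture(load)}")
```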

As rack power grows, so must cooling capacity, and liquid cooling has emerged as the solution to meet this challenge.

According to a Google Cloud article, water can transport approximately 4,000 times more heat per unit volume than air for a given temperature change, and its thermal conductivity is roughly 30 times greater than that of air.
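As a sanity check on those figures, this sketch computes both ratios from approximate textbook properties near room temperature (the constants are assumptions, not values from the Google Cloud article). With these inputs the volumetric heat-capacity ratio comes out around 3,500x and the conductivity ratio in the low 20s, the same order of magnitude as the quoted figures; the exact numbers shift with temperature, pressure and the property values used.

```python
# Back-of-the-envelope check of the water-versus-air comparison, using
# approximate room-temperature properties (assumed values).

WATER_DENSITY = 997.0          # kg/m^3
WATER_SPECIFIC_HEAT = 4_186.0  # J/(kg*K)
AIR_DENSITY = 1.2              # kg/m^3, near sea level and ~20 C
AIR_SPECIFIC_HEAT = 1_005.0    # J/(kg*K)
WATER_CONDUCTIVITY = 0.6       # W/(m*K)
AIR_CONDUCTIVITY = 0.026       # W/(m*K)

# Volumetric heat capacity: heat stored per cubic metre per kelvin of rise.
water_vol_heat = WATER_DENSITY * WATER_SPECIFIC_HEAT  # J/(m^3*K)
air_vol_heat = AIR_DENSITY * AIR_SPECIFIC_HEAT        # J/(m^3*K)

heat_capacity_ratio = water_vol_heat / air_vol_heat
conductivity_ratio = WATER_CONDUCTIVITY / AIR_CONDUCTIVITY

print(f"Heat per unit volume per kelvin: {heat_capacity_ratio:,.0f}x")
print(f"Thermal conductivity: {conductivity_ratio:.0f}x")
```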

A coolant distribution unit, or CDU for short, is a system that circulates liquid coolant to remove heat from data center equipment, and its capacity must scale along with rack power. Google shared a picture of its fourth-generation CDU, which has redundant heat exchanger pumps. The fifth generation of this CDU, codenamed Project Deschutes, will be contributed to the OCP later this year to accelerate the adoption of liquid cooling at scale!
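To get a feel for what a CDU has to move, the sketch below applies the heat balance Q = ṁ · c_p · ΔT to estimate water flow per rack. It assumes plain water with approximate room-temperature properties and that the entire IT load ends up in the liquid loop; real loops often use glycol mixes and capture only part of the heat in liquid, so these are illustrative numbers only.

```python
# Sketch: coolant flow needed to carry away a given heat load,
# from Q = m_dot * c_p * delta_T. Assumes plain water and that all IT power
# is rejected into the liquid loop (both simplifications).

WATER_SPECIFIC_HEAT = 4_186.0  # J/(kg*K), approximate
WATER_DENSITY = 997.0          # kg/m^3, approximate


def coolant_flow_lpm(heat_w: float, delta_t_k: float) -> float:
    """Litres per minute of water needed to absorb heat_w with a delta_t_k rise."""
    mass_flow_kg_s = heat_w / (WATER_SPECIFIC_HEAT * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / WATER_DENSITY
    return volume_flow_m3_s * 1_000 * 60  # m^3/s -> L/min


for rack_kw in (140, 1_000):
    for delta_t in (10, 15):
        lpm = coolant_flow_lpm(rack_kw * 1e3, delta_t)
        print(f"{rack_kw:>5} kW rack, dT = {delta_t} K: ~{lpm:,.0f} L/min")
```

Under these assumptions, a 1 MW rack with a 10 K coolant temperature rise needs roughly 1,400 L/min of water, and doubling ΔT halves the required flow.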

Now, there are many details and nuances to all of this, and the upcoming Mount Diablo specification will reveal more. If this topic is close to your heart, I encourage you to join the monthly OCP Power & Rack community calls to listen in or participate actively! Also consider attending the next OCP summit; it’s a great place to keep a finger on the pulse of industry developments.

Rarely are hyperscalers so open about their designs and plans, and the summit is a chance to connect with expert engineers and startups alike. The data center is transforming to cope with the massive disruption of AI.

While this is all very exciting, I would be remiss not to mention that the OCP community is also starting to actively track the requirements for quantum computing. These requirements are currently hard to even define in a rack standard and call for a more holistic approach, making quantum yet another challenge for the data center to tackle in the near future!