WEKA Unveils NeuralMesh for Microsecond AI Response

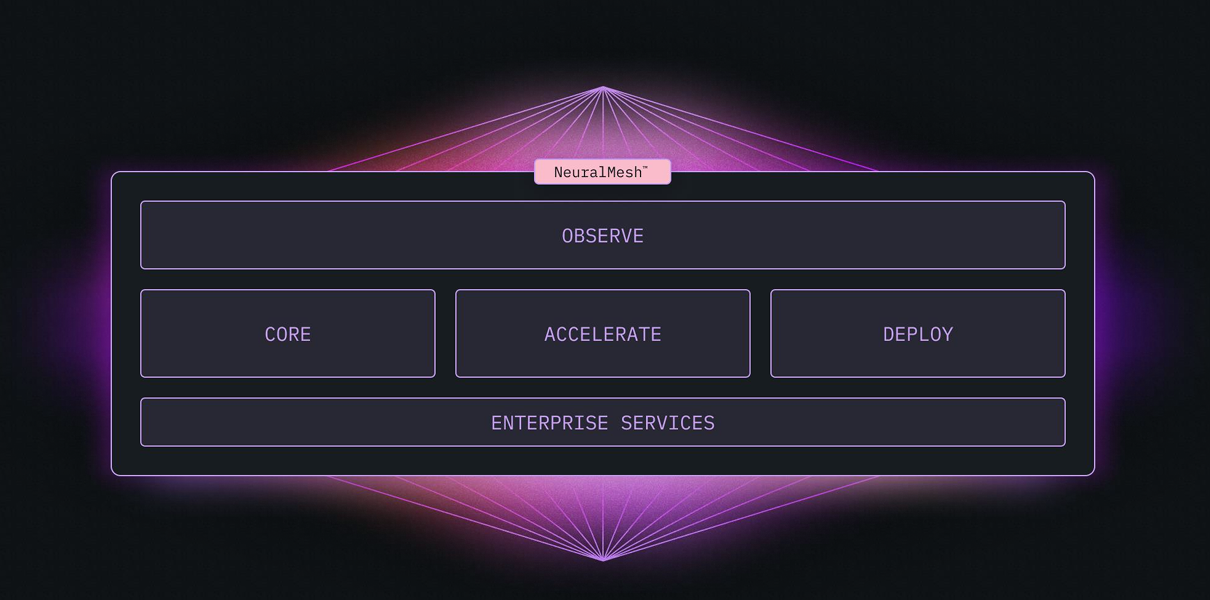

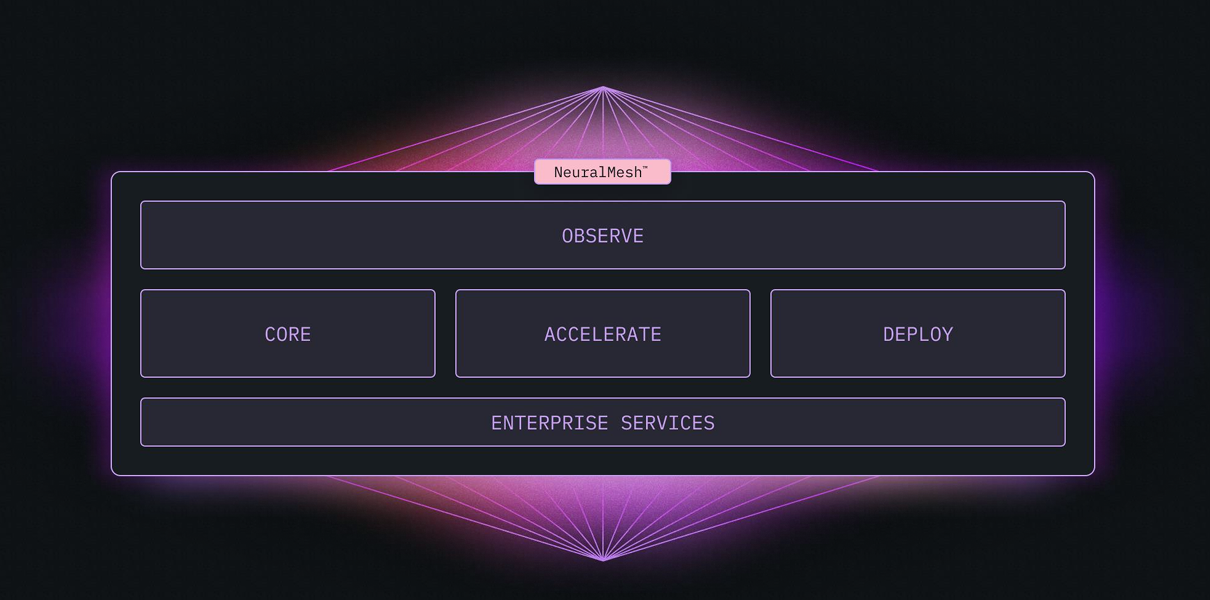

Today, AI-native data platform company WEKA launched NeuralMesh, a software-defined storage system designed to power AI applications that require real-time responses. The mesh-based architecture delivers high performance for AI workloads and scales from petabytes to exabytes, becoming more resilient as it grows.

WEKA built NeuralMesh specifically for enterprise AI and agentic AI systems that demand data access in microseconds, not milliseconds. It offers a fully containerized, mesh-based architecture to seamlessly connect data, storage, compute, and AI services.

“AI innovation continues to evolve at a blistering pace,” says Liran Zvibel, WEKA’s cofounder and CEO. “Across our customer base, we are seeing petascale customer environments growing to exabyte scale at an incomprehensible rate. The future is exascale. Regardless of where you are in your AI journey today, your data architecture must be able to adapt and scale to support this inevitability or risk falling behind.”

Traditional storage architectures slow down and become fragile as AI environments expand, forcing organizations to add costly compute and memory resources to keep up with the demands of their AI workloads. In contrast, NeuralMesh's software-defined, microservices-based architecture allows its performance to improve rather than degrade as data volumes reach exabyte scale. The company says it is the “world’s only intelligent, adaptive storage system” purpose-built for accelerating graphics processing units (GPUs), tensor processing units (TPUs), and AI workloads.

WEKA highlights five key breakthrough capabilities delivered by NeuralMesh:

- Microsecond data access, even with massive datasets

- Self-healing infrastructure that gets stronger with scale

- Deployment flexibility across data center, edge, cloud, hybrid, and multicloud

- Intelligent monitoring for automatic performance optimization

- Enterprise-level security without performance compromise

The solution offers benefits for AI companies looking to train models faster and deploy agents that respond quickly; for hyperscale and neocloud service providers looking to serve more customers without expanding their infrastructure; and for enterprises that need to deploy and scale AI-ready infrastructure without overwhelming complexity.

NeuralMesh represents more than a decade of development and is backed by more than 140 patents. WEKA hails it as a “revolutionary leap” for its data infrastructure portfolio, which began as a parallel file system for high-performance computing and machine learning and evolved to pioneer the high-performance AI data platform category in 2021.

NeuralMesh launches in limited release for enterprise and large-scale AI deployments, with general availability planned for fall 2025.

The TechArena Take

Following closely on last week’s announcement that WEKA and Nebius will deliver a GPU-as-a-Service (GPUaaS) platform, this news further demonstrates how WEKA’s commitment to innovation is solidifying its position as the high-performance storage layer for AI.

The scale here, with microsecond response times and exabyte-scale capability, is remarkable—and necessary. NeuralMesh positions enterprises to harness the full potential of agentic AI systems where split-second decisions drive competitive advantage.

Customers of WEKA’s existing product have already reported impressive results. A case study with Stability AI found that by using WEKA, Stability AI achieved 93% GPU utilization during AI model training and increased its cloud storage capacity while reducing costs by 80%. We’ll be watching to see what results NeuralMesh can drive as a technological leap forward.